
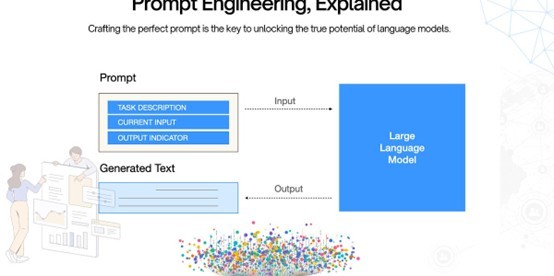

Rapid progress in artificial intelligence has produced language models that can process and generate human-like language. These models have a wide range of applications, from text summarization and language translation to chatbots. A poorly conceived prompt may yield unclear or irrelevant results, whereas a well-crafted one can elicit accurate and informative replies. As language models become commonplace, it is important to understand the principles behind effective prompts that make the most of their capabilities. This post examines the prompt engineering concepts that help developers and users write prompts that work well.

Understanding Language Models

A language model is a kind of artificial intelligence designed to process and produce human-like language. It learns patterns and relationships among words, phrases, and sentences by training on large quantities of text data. As a result of this training, the model can predict the next word in a sequence from the context of the words that precede it.
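To make next-word prediction concrete, here is a minimal sketch of a bigram model, which only counts which word most often follows another. It is a toy stand-in, not how modern language models are built; the function names `train_bigram_model` and `predict_next_word` and the tiny corpus are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follower_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follower_counts[current_word][next_word] += 1
    return follower_counts


def predict_next_word(model, word):
    """Return the most frequently observed follower of `word`, or None."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text; a real model sees vastly more data.
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigram_model(corpus)

print(predict_next_word(model, "the"))  # "cat" follows "the" most often here
```

Real language models condition on much longer contexts than a single preceding word, but the idea of learning likely continuations from text is the same.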

The two main categories of language models are generative and discriminative models. Generative models, like GPT-3, produce text according to the input prompt, whereas discriminative models, such as a BERT-based sentiment classifier, categorize or predict a specific output based on the input text.

Defining Clear Objectives

An essential first step in creating effective language model prompts is to define your goals clearly. A well-defined goal gives the model a precise path to follow, making it more likely that the output meets the required standards. To set a clear objective, establish the task, the desired output, and the key performance indicators (KPIs). For example, if the objective is to create a product description, the desired output could be a succinct and informative paragraph, and the KPIs may be the presence of particular features and a particular tone. By keeping the language model's attention on the most important parts of the task, a clear objective lowers the chance of irrelevant or off-topic replies. Furthermore, with well-defined objectives, engineers can assess the model's performance accurately, making it easier to refine and improve the prompts over time.
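One way to keep the task, the desired output, and the KPIs together is to record them in a small structure that can both render the prompt and check a reply against it. The sketch below is only an illustration: the class `PromptObjective`, its fields, and the two simple checks are assumptions, and a check such as tone would need a human or a more capable evaluator.

```python
from dataclasses import dataclass, field


@dataclass
class PromptObjective:
    task: str
    desired_output: str
    required_terms: list = field(default_factory=list)  # KPI: features that must appear
    max_words: int = 80  # KPI: keeps the paragraph succinct

    def to_prompt(self):
        """Render the objective as a plain-text prompt."""
        terms = ", ".join(self.required_terms)
        return (
            f"Task: {self.task}\n"
            f"Desired output: {self.desired_output}\n"
            f"Must mention: {terms}\n"
            f"Length: at most {self.max_words} words"
        )

    def check(self, response):
        """Return a dict mapping each KPI name to True or False."""
        text = response.lower()
        return {
            "mentions_required_terms": all(
                term.lower() in text for term in self.required_terms
            ),
            "within_word_limit": len(response.split()) <= self.max_words,
        }


# Hypothetical product and KPIs, chosen only for the example.
objective = PromptObjective(
    task="Write a product description for a stainless steel water bottle.",
    desired_output="One succinct, informative paragraph in a friendly tone.",
    required_terms=["insulated", "leak-proof"],
    max_words=60,
)
print(objective.to_prompt())

sample_response = "Stay refreshed with this insulated, leak-proof bottle built for daily use."
print(objective.check(sample_response))
```

Writing the KPIs down as checks makes it easier to compare prompt revisions later, since every reply is measured against the same criteria.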

A clearly stated objective also helps prevent ambiguity and vagueness in the prompt. When a prompt is ambiguous, the language model struggles to infer the intended outcome, which can result in unclear or inconsistent answers. Giving the model a clear target means it works toward a defined goal instead of guessing. For example, "Write a story about a character" is too vague. In contrast, the prompt "Write a 500-word fantasy story about an individual who discovers an unseen planet" gives the language model a defined goal to work towards. By stating their goals up front, developers can save time and money, because their prompts are more likely to produce high-quality replies.

Using Specific Context

Providing specific and relevant context is essential for crafting effective prompts for language models. Context refers to the background information, assumptions, and constraints that shape the language model's understanding of the task. A well-crafted context helps the language model to generate responses that are accurate, informative, and relevant to the task at hand. To provide effective context, developers should consider the following factors: domain knowledge, tone and style, and specific requirements.

Domain knowledge refers to the specific area of expertise or topic that the language model should be familiar with. For instance, a prompt about medical diagnosis should provide context about the relevant medical concepts and terminology. Tone and style refer to the voice and language usage the model should adopt, which should align with the desired output. Specific requirements refer to any constraints or guidelines that the language model should follow, such as word count, format, or specific keywords.
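The three kinds of context (domain knowledge, tone and style, and specific requirements) can be assembled into a prompt with a small helper. This is a minimal sketch; the function `build_prompt`, its parameter names, the section labels, and the sample medical task are assumptions made for illustration, not a standard format.

```python
def build_prompt(task, domain_context="", tone="", requirements=None):
    """Assemble a prompt from a task plus optional context sections."""
    sections = []
    if domain_context:
        sections.append(f"Background: {domain_context}")
    if tone:
        sections.append(f"Tone and style: {tone}")
    if requirements:
        bullet_list = "\n".join(f"- {item}" for item in requirements)
        sections.append(f"Requirements:\n{bullet_list}")
    # The task goes last so the context is read before the instruction.
    sections.append(f"Task: {task}")
    return "\n\n".join(sections)


# Hypothetical example values.
prompt = build_prompt(
    task="Explain what an elevated resting heart rate can indicate.",
    domain_context="The reader is a first-year nursing student; use standard clinical terminology.",
    tone="Clear, neutral, and educational.",
    requirements=["At most 150 words", "Plain text, no lists", "Use the term 'tachycardia'"],
)
print(prompt)
```

Keeping each kind of context in its own labeled section makes it easy to change one factor at a time and see how the reply changes.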

Additionally, providing context can help to reduce the risk of bias and inaccuracies in the generated responses. By specifying the relevant domain knowledge, tone, and style, developers make it more likely that the language model generates responses that are fair and respectful, although context alone cannot guarantee this. Incorporating specific and relevant context into the prompt is one of the most direct ways to improve the quality of the results.

Continuous Refining

Continuously refining and iterating on prompts is crucial for getting good results from language models. Language models can be sensitive to subtle changes in prompts, and even small refinements can significantly affect the quality of the generated responses. To refine and iterate on prompts, developers should regularly evaluate the performance of the language model, identify areas for improvement, and adjust the prompt accordingly.

This process involves testing different variations of the prompt, analyzing the results, and incorporating feedback from users or evaluators. By continuously refining and iterating on prompts, developers can improve the accuracy and relevance of the generated responses and keep the model's output aligned with the desired objectives.
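The loop of testing variations and analyzing the results can be sketched as a small comparison harness. Everything here is illustrative: `score_response` is a deliberately crude scoring rule, `pick_best_prompt` is a hypothetical helper, and `fake_generate` is a stand-in for a real model call so that the example runs offline.

```python
def score_response(response, required_terms, max_words):
    """Toy score: fraction of required terms present, zero if too long."""
    if len(response.split()) > max_words:
        return 0.0
    if not required_terms:
        return 1.0
    text = response.lower()
    hits = sum(1 for term in required_terms if term.lower() in text)
    return hits / len(required_terms)


def pick_best_prompt(variants, generate, required_terms, max_words):
    """Run each variant through `generate`; return (best_prompt, scores)."""
    scores = {
        prompt: score_response(generate(prompt), required_terms, max_words)
        for prompt in variants
    }
    best = max(scores, key=scores.get)
    return best, scores


def fake_generate(prompt):
    """Stand-in for a real model call (an assumption for this sketch)."""
    if "insulated" in prompt and "leak-proof" in prompt:
        return "An insulated, leak-proof bottle for everyday use."
    return "A nice bottle."


variants = [
    "Describe a water bottle.",
    "Describe a water bottle. Mention that it is insulated and leak-proof.",
]
best, scores = pick_best_prompt(
    variants, fake_generate, ["insulated", "leak-proof"], max_words=40
)
print(best)
print(scores)
```

In practice the scoring step would combine automated checks with feedback from users or evaluators, and replies would be sampled more than once per variant because model output can vary between runs.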

Conclusion

Crafting effective prompts for language models is a nuanced task that requires careful consideration of several factors. By following the guidelines outlined in this post, developers can create high-quality prompts that elicit accurate, informative, and relevant responses from language models. From defining clear objectives to providing specific context to continuously refining and iterating, each step plays an important role in getting the most out of language models.

To stay ahead of the curve, developers can leverage innovative tools and platforms like Vectorize.io, which provides a suite of AI-powered prompt engineering tools to help developers optimize their prompts and achieve better results from language models. By combining the guidelines outlined in this post with the technology of Vectorize.io, developers can take their language model development to the next level.
