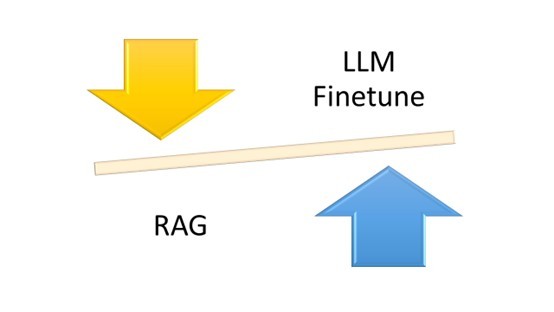

Artificial Intelligence (AI) has made remarkable progress, with recent advancements in two key areas: Retrieval-Augmented Generation (RAG) and LLM fine-tuning. Both techniques can significantly improve the performance of large language models (LLMs). Figures such as a 35% increase in response accuracy from RAG and a 50% reduction in error rates from fine-tuning are sometimes cited, but results of this kind depend heavily on the task, the data, and the baseline being compared. Understanding these methodologies is crucial, as they offer distinct advantages and trade-offs.

This blog post covers the methodologies, benefits, limitations, and decision-making criteria to help you choose the most suitable approach for your specific needs.

A Detailed Introduction to RAG and LLM Fine-Tuning

Two critical emerging methodologies are Retrieval-Augmented Generation (RAG) and fine-tuning. Both approaches aim to improve the performance and applicability of language models, but they do so in fundamentally different ways.

What is Retrieval Augmented Generation?

RAG, short for Retrieval-Augmented Generation, is a technique that combines retrieval mechanisms with generative capabilities to enhance the performance of large language models. The approach was introduced in 2020 by researchers at Facebook AI Research (now Meta AI) and their collaborators. It uses a pre-trained language model to generate responses and a retrieval system to fetch relevant information from an extensive knowledge base.

How Retrieval-Augmented Generation (RAG) Works

The RAG framework combines a retriever and a generator. The retriever component selects relevant documents from a knowledge base based on the input query. Then, the generator, which is a pre-trained language model, generates the response using the retrieved information.
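The retriever step can be illustrated with a minimal sketch. It assumes a toy in-memory knowledge base and scores documents by cosine similarity over simple word counts; production systems typically use learned embeddings and a vector index instead. The names `tokenize`, `cosine_similarity`, `retrieve`, and `KNOWLEDGE_BASE` are hypothetical and chosen only for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two sparse term-count vectors (Counters)."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, top_k=2):
    """Return up to top_k documents, most similar to the query first."""
    query_vec = Counter(tokenize(query))
    scored = [
        (cosine_similarity(query_vec, Counter(tokenize(doc))), doc)
        for doc in documents
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no terms with the query.
    return [doc for score, doc in scored[:top_k] if score > 0]


# Toy knowledge base (illustrative data only).
KNOWLEDGE_BASE = [
    "RAG combines a retriever with a generator.",
    "Fine-tuning adjusts the weights of a pre-trained model.",
    "The retriever selects relevant documents from a knowledge base.",
]

top_documents = retrieve("How does the retriever select documents?", KNOWLEDGE_BASE)
print(top_documents)
```

In a full pipeline, the documents returned by `retrieve` would be handed to the generator together with the original query.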

RAG uses a query mechanism to retrieve relevant information from one or more external datasets or knowledge bases. The retrieved information and the original prompt are then fed into a generative AI model, which incorporates this external data when generating its response. This process allows the model to produce answers based not only on its pre-trained knowledge but also on external data that may be more recent and more relevant.
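As a rough sketch of how retrieved information and the original prompt might be combined, the function below assembles a single augmented prompt. The template wording and the name `build_augmented_prompt` are assumptions made for illustration; the actual call to a generative model is left out because it depends on the model or API in use.

```python
def build_augmented_prompt(question, passages):
    """Combine retrieved passages and the user's question into one prompt."""
    # Number each passage so the model (and a reader) can refer back to it.
    context = "\n".join(
        f"[{i}] {passage}" for i, passage in enumerate(passages, start=1)
    )
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Illustrative passages standing in for the retriever's output.
retrieved_passages = [
    "The retriever selects relevant documents from a knowledge base.",
    "The generator produces a response using the retrieved information.",
]

prompt = build_augmented_prompt("How does RAG work?", retrieved_passages)
print(prompt)
```

The resulting string is what would be sent to the generative model, so the answer can draw on the retrieved passages as well as the model's pre-trained knowledge.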

Applications of RAG

RAG finds versatile applications across various domains, enhancing AI capabilities in different contexts:

Chatbots and AI Assistants

RAG-powered systems excel in question-answering scenarios, providing context-aware and detailed answers from extensive knowledge bases. These systems enable more informative and engaging interactions with users.

Education Tools

RAG can significantly improve educational tools by offering students access to answers, explanations, and additional context based on textbooks and reference materials. This facilitates more effective learning and comprehension.

Legal Research and Document Review

Legal professionals can leverage RAG models to streamline document review processes and conduct efficient legal research. RAG assists in summarizing statutes, case law, and other legal documents, saving time and improving accuracy.

Medical Diagnosis and Healthcare

RAG models serve as valuable tools for doctors and medical professionals in healthcare. They provide access to the latest medical literature and clinical guidelines, aiding in accurate diagnosis and treatment recommendations.

Language Translation with Context

RAG enhances language translation tasks by considering the context in knowledge bases. This approach results in more accurate translations, accounting for specific terminology and domain knowledge, which is particularly valuable in technical or specialized fields.

What is Fine-Tuning LLM?

LLM stands for Large Language Model. It refers to a model trained on a large corpus of text data to understand and generate human-like text based on the input. Fine-tuning an LLM means further training a model that has already been trained on a larger dataset, making relatively small adjustments to it. In machine learning, fine-tuning typically involves taking a pre-trained neural network and adjusting its parameters, and sometimes small parts of its architecture, to adapt it to a specific task or dataset.

How Fine-Tuning LLMs Works

The principle underpinning LLM fine-tuning is to take a pre-trained base model and train it further on a smaller, task-specific corpus from the target domain. During this phase, slight adjustments are made to the model’s internal parameters (weights) to reduce the prediction error in the new, specific context.
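The idea of starting from pre-trained weights and nudging them to reduce error on new data can be shown with a deliberately tiny sketch. It uses a two-parameter linear model rather than an LLM, and the starting weights, data, learning rate, and epoch count are all made-up values for illustration; real fine-tuning applies the same kind of gradient update to millions or billions of weights using a deep learning framework.

```python
def predict(weights, x):
    """Linear model: y = w * x + b."""
    w, b = weights
    return w * x + b


def mean_squared_error(weights, data):
    """Average squared prediction error over (x, y) pairs."""
    return sum((predict(weights, x) - y) ** 2 for x, y in data) / len(data)


def fine_tune(weights, data, learning_rate=0.01, epochs=50):
    """Start from the given (pre-trained) weights and take small gradient steps."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return (w, b)


# Assumed "pre-trained" weights, standing in for a model trained on general data.
pretrained_weights = (2.0, 0.0)

# Small domain-specific dataset (illustrative values following y = 2.5x + 1).
domain_data = [(1.0, 3.5), (2.0, 6.0), (3.0, 8.5)]

loss_before = mean_squared_error(pretrained_weights, domain_data)
fine_tuned_weights = fine_tune(pretrained_weights, domain_data)
loss_after = mean_squared_error(fine_tuned_weights, domain_data)

print(f"loss before: {loss_before:.4f}, loss after: {loss_after:.4f}")
```

The small learning rate is the point of the sketch: the weights move only a little from their pre-trained values, yet the error on the domain data drops.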

LLMs are typically pre-trained using self-supervised learning on a large dataset containing text from various sources. Models like GPT-3.5 are built on a transformer architecture with multiple layers of self-attention. Fine-tuning continues this training on the smaller, task-specific dataset, so that the resulting model draws on both its original training and the newly learned domain knowledge to provide improved replies.

Applications of Fine-Tuning LLM

Here are some notable applications of fine-tuning LLMs:

Text Classification

LLMs can be fine-tuned for text classification tasks, where the goal is to categorize text into predefined classes or labels. Trained on labeled examples from a specific domain, the LLM learns to classify text based on the patterns and features relevant to that domain. This approach has been successfully applied in sentiment analysis, spam detection, and topic classification.

Named Entity Recognition (NER)

NER involves identifying and classifying named entities in text, such as names, locations, organizations, and dates. Fine-tuning can improve the performance of NER systems by training the model on labeled data specific to the desired entity types. This allows the model to learn to recognize and extract named entities accurately in a given domain or language.
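Labeled NER data is often expressed as one tag per token. The sketch below converts entity spans into the widely used BIO scheme (B = beginning of an entity, I = inside, O = outside). The example sentence, the label names, and the helper `to_bio_tags` are illustrative assumptions, not a format required by any particular library.

```python
def to_bio_tags(tokens, entities):
    """Convert entity spans into one BIO tag per token.

    entities is a list of (start, end, label) tuples, where start and end
    are token indices and end is exclusive.
    """
    tags = ["O"] * len(tokens)
    for start, end, label in entities:
        tags[start] = f"B-{label}"
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"
    return tags


# Made-up example sentence, already split into tokens.
tokens = ["Alice", "Smith", "joined", "Acme", "Corp", "in", "Paris", "in", "2021"]
entities = [(0, 2, "PER"), (3, 5, "ORG"), (6, 7, "LOC"), (8, 9, "DATE")]

tags = to_bio_tags(tokens, entities)
for token, tag in zip(tokens, tags):
    print(f"{token}\t{tag}")
```

Token and tag sequences like these are what a model would be trained on when fine-tuning for a specific set of entity types.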

Text Summarization

LLMs can be fine-tuned for text summarization tasks, where the objective is to generate concise summaries of longer texts. By training the model on pairs of source documents and their corresponding summaries, the fine-tuned LLM can learn to generate abstractive or extractive summaries that capture the critical information from the source text.
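One common way to prepare such document and summary pairs is to write them out as JSON Lines, one training record per line. The sketch below shows the idea; the field names `input` and `target`, the `Summarize:` prefix, and the helper `to_training_records` are hypothetical, since the exact schema depends on the training tool being used.

```python
import json


def to_training_records(pairs):
    """Turn (document, summary) pairs into JSON Lines text, one record per pair."""
    lines = []
    for document, summary in pairs:
        # Field names are assumptions; adapt them to the training tool's schema.
        record = {"input": f"Summarize: {document}", "target": summary}
        lines.append(json.dumps(record))
    return "\n".join(lines)


# Made-up training pairs for illustration.
pairs = [
    (
        "RAG retrieves documents from a knowledge base and passes them to a generator.",
        "RAG pairs retrieval with generation.",
    ),
    (
        "Fine-tuning continues training a pre-trained model on task-specific data.",
        "Fine-tuning adapts a pre-trained model to a task.",
    ),
]

jsonl_text = to_training_records(pairs)
print(jsonl_text)
```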

Machine Translation

Fine-tuning LLMs has been applied to improve machine translation systems. By training the LLM on parallel corpora of source and target language sentences, the model can learn to generate more accurate and fluent translations. Fine-tuning can help adapt the model to specific language pairs or domains, improving translation quality.

Dialogue Systems

Fine-tuning LLMs can improve dialogue systems by training the model on conversational data. This enables the LLM to generate more contextually appropriate and coherent responses in conversational settings.

Key Differences Between RAG and LLM Fine-Tuning

Conclusion

Vectorize explores two distinct methodologies within the realm of natural language processing: Retrieval-Augmented Generation (RAG) and fine-tuning Large Language Models (LLMs). RAG harnesses the capabilities of a pre-trained language model augmented with a retrieval system, utilizing an extensive knowledge base to craft responses that are both accurate and highly relevant to the context. Conversely, fine-tuning an LLM entails customizing a pre-existing language model for particular tasks or domains. This is achieved by training the model on data specific to the task at hand, thereby equipping it to produce text that aligns with the learned patterns. Grasping the nuances and potential uses of RAG and LLM fine-tuning allows Vectorize's researchers and developers to judiciously select the most appropriate strategy for their specific project needs.
